Fabric Access Policies enable communication of systems that are attached to the Cisco ACI fabric.

You build a fabric access policy from the following configuration elements:

- Pool: Defines a range of identifiers, such as VLANs

- Physical domain: References a pool. You can think of it as a resource container

- Attachable Access Entity Profile (AAEP): References a physical domain and therefore specifies the VLAN pool that is activated on an interface.

- Interface policy: Defines a protocol or interface properties that are applied to interfaces.

- Interface policy group: Gathers multiple interface policies into one set and binds them to an AAEP.

- Interface profile: Chooses one or more access ports and associates them with an interface policy group.

- Switch Profile: Chooses one or more leaf switches and associates them with an interface profile.
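To make the chain of references concrete, the sketch below models the elements listed above as plain Python dataclasses and walks the chain to find which VLAN IDs end up usable on a given leaf port. It illustrates the relationships only and is not the APIC object model; every class, field, and object name (for example `example_pool`) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VlanPool:
    name: str
    ranges: list[tuple[int, int]]  # inclusive (first, last) VLAN IDs


@dataclass
class PhysicalDomain:
    name: str
    pool: VlanPool  # a domain references a pool


@dataclass
class Aaep:
    name: str
    domains: list[PhysicalDomain]  # an AAEP references one or more domains


@dataclass
class InterfacePolicyGroup:
    name: str
    aaep: Aaep  # the policy group binds interface policies to an AAEP
    interface_policies: list[str]


@dataclass
class InterfaceProfile:
    name: str
    ports: list[str]  # for example "1/29"
    policy_group: InterfacePolicyGroup


@dataclass
class SwitchProfile:
    name: str
    leaf_ids: list[int]
    interface_profiles: list[InterfaceProfile]


def allowed_vlans(profile: SwitchProfile, leaf_id: int, port: str) -> set[int]:
    """Follow switch profile -> interface profile -> policy group -> AAEP -> domain -> pool."""
    vlans: set[int] = set()
    if leaf_id not in profile.leaf_ids:
        return vlans
    for intf_profile in profile.interface_profiles:
        if port not in intf_profile.ports:
            continue
        for domain in intf_profile.policy_group.aaep.domains:
            for first, last in domain.pool.ranges:
                vlans.update(range(first, last + 1))
    return vlans


# Hypothetical example objects, one per element in the list above.
pool = VlanPool("example_pool", [(2900, 2949)])
domain = PhysicalDomain("example_domain", pool)
aaep = Aaep("example_aaep", [domain])
group = InterfacePolicyGroup("example_group", aaep, ["cdp", "lldp"])
intf_profile = InterfaceProfile("example_intf_profile", ["1/29"], group)
switch_profile = SwitchProfile("example_switch_profile", [203], [intf_profile])

print(len(allowed_vlans(switch_profile, 203, "1/29")))  # 50 VLAN IDs: 2900 through 2949
```

A port that no interface profile selects, or a leaf that the switch profile does not include, resolves to an empty set, which mirrors the idea that nothing is activated on an interface until the whole chain is in place.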

VLAN Pool

A pool represents a range of traffic encapsulation identifiers (for example, VLAN IDs, VNIDs, and multicast addresses). A pool is a shared resource and can be consumed by multiple domains, physical or virtual. A leaf switch does not support overlapping VLAN pools, so you must not associate different overlapping VLAN pools with the same virtual domain.
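Because overlapping pools are the thing to avoid, it can help to check a planned set of pools before configuring them. The following is a minimal sketch of such a check; it is an offline helper, not an APIC feature, and the pool names are hypothetical.

```python
from itertools import combinations


def ranges_overlap(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """Two inclusive (first, last) ranges overlap if each starts before the other ends."""
    return a[0] <= b[1] and b[0] <= a[1]


def overlapping_pools(pools: dict[str, list[tuple[int, int]]]) -> list[tuple[str, str]]:
    """Return every pair of pool names that share at least one VLAN ID."""
    clashes = []
    for (name_a, ranges_a), (name_b, ranges_b) in combinations(pools.items(), 2):
        if any(ranges_overlap(ra, rb) for ra in ranges_a for rb in ranges_b):
            clashes.append((name_a, name_b))
    return clashes


planned = {
    "pool_a": [(2900, 2949)],
    "pool_b": [(2950, 2999)],
}
print(overlapping_pools(planned))  # [] because the two ranges are disjoint
```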

When you’re creating a VLAN pool, you must define the type of allocation that the pool uses. There are two types:

Static Allocation:

- It requires the administrator to choose which VLAN is used. This is used primarily to attach physical devices to the fabric.

- The EPG has a relation to the domain, and the domain has a relation to the pool. The pool contains a range of encapsulation VLANs and VXLANs. For static EPG deployment, the user defines the interface and the encapsulation. The encapsulation must be within the range of a pool that is associated with a domain with which the EPG is associated.

Dynamic Allocation:

- It means that ACI decides which VLAN is used for a specific EPG. Most often you’ll see this when integrating with a hypervisor such as VMware.

- In this case ACI chooses the VLAN that will be used (and configures the port group on the hypervisor to use that specific VLAN). This is ideal for situations in which you don’t care which VLAN carries the traffic, as long as it is mapped into the right EPG.
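The difference between the two allocation types can be sketched in a few lines. With static allocation, the administrator supplies the encapsulation and it only has to be validated against the pool; with dynamic allocation, the system picks a free VLAN itself. The selection order below (lowest free ID first) is an assumption made for illustration; the text above does not say how ACI chooses, and the function names are hypothetical.

```python
def encap_allowed(vlan_id: int, pool_ranges: list[tuple[int, int]]) -> bool:
    """Static allocation: the chosen VLAN must fall inside one of the pool's ranges."""
    return any(first <= vlan_id <= last for first, last in pool_ranges)


def pick_dynamic_vlan(pool_ranges: list[tuple[int, int]], in_use: set[int]) -> int:
    """Dynamic allocation: pick a free VLAN from the pool (lowest first, by assumption)."""
    for first, last in sorted(pool_ranges):
        for vlan_id in range(first, last + 1):
            if vlan_id not in in_use:
                return vlan_id
    raise RuntimeError("no free VLAN left in the pool")


static_ranges = [(2900, 2949)]
dynamic_ranges = [(2950, 2999)]

print(encap_allowed(2910, static_ranges))               # True: inside 2900-2949
print(encap_allowed(2950, static_ranges))               # False: outside the static pool
print(pick_dynamic_vlan(dynamic_ranges, {2950, 2951}))  # 2952
```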

Note: For completeness, there are also VXLAN pools. You can use these to attach devices that support VXLAN, such as your hypervisor. Most fabrics only use VLAN pools, but be aware that VXLAN pools exist and that you can use them if required.

Steps to navigate to Access Policies and create a VLAN pool for a physical domain:

- Click Fabric

- Click Access Policies

- Expand Pools by clicking the toggle arrow (>)

- Right-click on VLAN

- Click Create VLAN Pool

- Name the VLAN Pool: <User defined name>

- Ensure Static Allocation is selected

- Then click the plus sign (+) button to add your VLAN pool range

- VLAN Range: For example, 2900 – 2949

- Click Ok

- For a dynamically allocated pool, repeat the Create VLAN Pool steps and name the VLAN Pool: <User defined name>

- Ensure Dynamic Allocation is selected

- Then click the plus sign (+) button to add your VLAN pool range

- VLAN Range: For example, 2950 – 2999

- Click Ok
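The same two pools can also be described as data instead of GUI clicks. The sketch below builds the JSON bodies that would typically be posted to the APIC REST API. The class and attribute names (`fvnsVlanInstP`, `fvnsEncapBlk`, `allocMode`, `from`, `to`) reflect our understanding of the APIC object model and should be verified against the APIC Management Information Model reference for your release. The pool names are hypothetical, and authentication and the HTTP call itself are deliberately left out.

```python
import json


def vlan_pool_payload(name: str, alloc_mode: str, first_vlan: int, last_vlan: int) -> dict:
    """Build a VLAN pool object with a single encapsulation block."""
    if alloc_mode not in ("static", "dynamic"):
        raise ValueError("alloc_mode must be 'static' or 'dynamic'")
    if not 1 <= first_vlan <= last_vlan <= 4094:
        raise ValueError("VLAN range must satisfy 1 <= first <= last <= 4094")
    return {
        "fvnsVlanInstP": {
            "attributes": {
                # Assumed DN format: the allocation mode is part of the pool's DN.
                "dn": f"uni/infra/vlanns-[{name}]-{alloc_mode}",
                "name": name,
                "allocMode": alloc_mode,
            },
            "children": [
                {
                    "fvnsEncapBlk": {
                        "attributes": {
                            "from": f"vlan-{first_vlan}",
                            "to": f"vlan-{last_vlan}",
                        }
                    }
                }
            ],
        }
    }


# Hypothetical pool names; the ranges are the ones used in the steps above.
static_pool = vlan_pool_payload("example_static_pool", "static", 2900, 2949)
dynamic_pool = vlan_pool_payload("example_dynamic_pool", "dynamic", 2950, 2999)

print(json.dumps(static_pool, indent=2))
```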

Physical Domain

A domain is used to define the scope of VLANs in the Cisco ACI fabric, in other words, where and how a VLAN pool will be used.

Domains are used to map an EPG to a VLAN pool. An EPG must be a member of a domain, and the domain must reference a VLAN pool. This makes it possible for an EPG to have a VLAN encap.

There are several types of domains:

- Physical domains (physDomP): Typically used for bare metal server attachment and management access.

- Virtual domains (vmmDomP): Required for virtual machine hypervisor integration

- External Bridged domains (l2extDomP): Typically used to connect a bridged external network trunk switch to a leaf switch in the ACI fabric.

- External Routed domains, or L3 domains (l3extDomP): Used to connect a router to a leaf switch in the ACI fabric. Within this domain, protocols like OSPF and BGP can be used to exchange routes.

- Fibre Channel domains (fcDomP): Used to connect Fibre Channel VLANs and VSANs

Steps to create a physical domain:

- Click Fabric

- Click Access Policies

- In the left navigation pane, all the way at the bottom, expand Physical and External Domains by clicking the toggle arrow (>)

- Right-click on Physical Domains

- Click Create Physical Domain

- Name the Physical Domain: <User-defined Name>

- In the VLAN Pool dropdown, select your VLAN Pool created in the previous section

- Click Submit

Steps to create a Layer 3 domain:

- Click Fabric

- Click Access Policies

- In the left navigation pane, all the way at the bottom, expand Physical and External Domains by clicking the toggle arrow (>)

- Right-click on L3 Domains and Click Create Layer 3 Domain

- Name the Layer 3 Domain: aci_p29_extrtdom

- Click Submit
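As a data-oriented view of the two domain procedures above, the sketch below builds one object for a physical domain that references a VLAN pool and one for a Layer 3 domain. The class names `physDomP` and `l3extDomP` come from the list of domain types; the relation class `infraRsVlanNs` and the DN formats are our understanding of the APIC object model and should be verified. The physical domain and pool names are hypothetical; `aci_p29_extrtdom` is the name used in the steps.

```python
def physical_domain_payload(name: str, pool_name: str, alloc_mode: str = "static") -> dict:
    """Physical domain that points at a VLAN pool by DN."""
    return {
        "physDomP": {
            "attributes": {"dn": f"uni/phys-{name}", "name": name},
            "children": [
                {
                    "infraRsVlanNs": {
                        "attributes": {
                            # Assumed DN format of the referenced VLAN pool.
                            "tDn": f"uni/infra/vlanns-[{pool_name}]-{alloc_mode}"
                        }
                    }
                }
            ],
        }
    }


def l3_domain_payload(name: str) -> dict:
    """Layer 3 domain with only a name, matching the GUI steps above."""
    return {"l3extDomP": {"attributes": {"dn": f"uni/l3dom-{name}", "name": name}}}


phys_dom = physical_domain_payload("example_phys_dom", "example_static_pool")
l3_dom = l3_domain_payload("aci_p29_extrtdom")

print(phys_dom["physDomP"]["children"][0]["infraRsVlanNs"]["attributes"]["tDn"])
print(l3_dom["l3extDomP"]["attributes"]["dn"])
```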

Attachable Access Entity Profile (AAEP)

The AAEP is another connector. It connects the domain (and thereby the VLAN and the EPG) to the policy group, which defines the policy on a physical port. When defining an AAEP, you need to specify which domains are to be available to the AAEP. These domains (and their VLANs) will be usable by the physical port.

Sometimes you need to configure a lot of EPGs on a lot of ports. Say, for example, you’re not doing any VMware integration, but you do need to have ESXi hosts connected to your fabric. The old way of doing this was to create trunk ports and trunk all the required VLANs to the VMware host. In ACI you’d need to configure a static port to the ESXi host on every EPG that needs to be available on that host. If you’re not automating this, it could take a lot of work. Even with automation, this might be a messy way to do it.

That’s why you can configure an EPG directly under the AAEP. This causes every port that is a member of that AAEP to automatically have all the EPGs defined at the AAEP level.

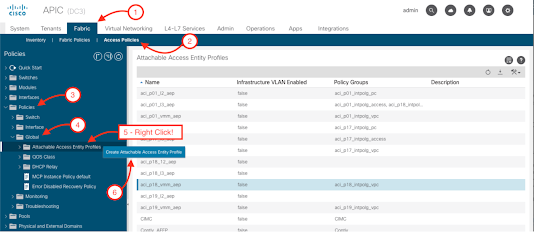

Steps to create an AAEP:

- Click Fabric

- Click Access Policies

- Expand Policies by clicking the toggle arrow (>)

- Expand Global by clicking the toggle arrow (>)

- Right-click on Attachable Access Entity Profiles

- Click Create Attachable Access Entity Profile

- Name the AEP: <User-defined Name>

- Click the plus button (+) to add a Domain

- In the Domain Profile dropdown, select your Physical Domain created in the previous section:

- Click Update

- Click Next

- For the Layer 3 domain, repeat the Create Attachable Access Entity Profile steps and name the AEP: <User-defined Name>

- Click the plus button (+) to add a Domain

- In the Domain Profile dropdown, select your Layer 3 Domain created in the previous section:

- Click Update

- Click Next
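The AAEP created in the steps above is essentially a named list of domain references. A minimal sketch of that structure follows. The class names `infraAttEntityP` and `infraRsDomP` and the DN formats are our understanding of the APIC object model and should be verified. The physical AAEP and domain names are hypothetical; `aci_p29_l3_aep` and `aci_p29_extrtdom` are the names used elsewhere in this article.

```python
def aaep_payload(name: str, domain_dns: list[str]) -> dict:
    """AAEP with one domain relation per referenced domain DN."""
    return {
        "infraAttEntityP": {
            "attributes": {"dn": f"uni/infra/attentp-{name}", "name": name},
            "children": [
                {"infraRsDomP": {"attributes": {"tDn": domain_dn}}}
                for domain_dn in domain_dns
            ],
        }
    }


# One AAEP for the physical domain, one for the Layer 3 domain.
phys_aaep = aaep_payload("example_phys_aep", ["uni/phys-example_phys_dom"])
l3_aaep = aaep_payload("aci_p29_l3_aep", ["uni/l3dom-aci_p29_extrtdom"])

for aaep in (phys_aaep, l3_aaep):
    attrs = aaep["infraAttEntityP"]["attributes"]
    targets = [c["infraRsDomP"]["attributes"]["tDn"] for c in aaep["infraAttEntityP"]["children"]]
    print(attrs["name"], "->", targets)
```

Passing several DNs in `domain_dns` yields an AAEP that makes more than one domain, and therefore more than one VLAN pool, available to the ports that use it.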

Interface policy group

The Interface Policy Group is a group of policies. These policies define the operation of the physical interface, for example the interface speed, CDP settings, BPDU settings, LACP, and more.

This is also the place where the AAEP is referenced. So, the Interface Policy Group takes care of attaching the VLAN, domain, and EPG to an interface through the AAEP.

The specific policies are interface policies, which are configured beforehand.

Steps to navigate to Interface Policy Groups and create an Access Port Policy Group

- Fabric

- Access Policies

- Expand Interfaces by clicking the toggle arrow (>)

- Expand Leaf Interfaces by clicking the toggle arrow (>)

- Expand Policy Groups by clicking the toggle arrow (>)

- Right-click on Leaf Access Port

- Click Create Leaf Access Port Policy Group

- Name the Policy Group: <User-defined name>

- For the AEP, select aci_p29_l3_aep

- For Link Level Policy, select aci_lab_10G

- For CDP Policy, select aci_lab_cdp

- For LLDP Policy, select aci_lab_lldp

- For MCP Policy, select aci_lab_mcp

- For L2 Interface Policy, select aci_lab_l2global

- Scroll down

- Click Submit
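The policy group from the steps above can be pictured as one object that holds a reference to the AAEP plus one named reference per interface policy. The sketch below builds that structure. The relation class and attribute names in `POLICY_RELATIONS` are our understanding of the APIC object model and should be verified before use; the policy and AEP names are the ones selected in the steps, and `aci_p29_intpolg_access` is the policy group name used later in this article.

```python
# Maps a friendly key to (relation class, attribute holding the policy name).
# These class and attribute names are assumptions to verify against the APIC model.
POLICY_RELATIONS = {
    "link_level": ("infraRsHIfPol", "tnFabricHIfPolName"),
    "cdp": ("infraRsCdpIfPol", "tnCdpIfPolName"),
    "lldp": ("infraRsLldpIfPol", "tnLldpIfPolName"),
    "mcp": ("infraRsMcpIfPol", "tnMcpIfPolName"),
    "l2": ("infraRsL2IfPol", "tnL2IfPolName"),
}


def access_policy_group_payload(name: str, aaep_name: str, policies: dict[str, str]) -> dict:
    """Leaf access port policy group: an AAEP reference plus named interface policies."""
    children = [
        {"infraRsAttEntP": {"attributes": {"tDn": f"uni/infra/attentp-{aaep_name}"}}}
    ]
    for key, policy_name in policies.items():
        relation_class, attribute = POLICY_RELATIONS[key]  # KeyError on unknown policy type
        children.append({relation_class: {"attributes": {attribute: policy_name}}})
    return {
        "infraAccPortGrp": {
            "attributes": {
                "dn": f"uni/infra/funcprof/accportgrp-{name}",
                "name": name,
            },
            "children": children,
        }
    }


policy_group = access_policy_group_payload(
    "aci_p29_intpolg_access",
    "aci_p29_l3_aep",
    {
        "link_level": "aci_lab_10G",
        "cdp": "aci_lab_cdp",
        "lldp": "aci_lab_lldp",
        "mcp": "aci_lab_mcp",
        "l2": "aci_lab_l2global",
    },
)
print(len(policy_group["infraAccPortGrp"]["children"]))  # 6: one AAEP relation plus five policies
```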

Similarly, we can create a Port Channel policy group that is used as a Layer 2 connectivity policy for a single-node port channel. In ACI, each policy group for either Port Channel or Virtual Port Channel identifies the bundle of interfaces as a single interface policy in the fabric.

Interface Profile/Selector

Interface Profiles are the way the Policy Group is attached to a switch. Part of an Interface Profile is the Interface Selector. The Interface Selector specifies the interfaces and attaches the policy group to those interfaces. However, it does not specify which switch(es) those interfaces belong to.

You can have multiple interface selectors listed under a single Interface Profile. How you organize them depends on the way you like to work. Two common approaches are:

- Interface Profiles per switch

- Interface Profiles per policy group

The advantage of using an Interface Profile per policy group is that you can use consistent naming to map policy groups to Interface Profiles, making it easier to find the Interface Profile to which a policy group is attached. However, if you have a lot of policy groups, this could cause long lists in the GUI. This way of working is better suited for automation when you’re working in large fabrics.

Steps to Create Interface Profiles

- Fabric

- Access Policies

- Expand Quick Start by clicking the toggle arrow (>)

- Right-click on Interface Configuration

- Click Configure Interface

- Access port Interface

- Port-channel Interface

- VPC Interface

Steps to Create Access Port Interface

- Set the Leafs to 203

- Set the Interfaces to 1/29

- Ensure the Interface Type is set to Individual

- In the dropdown, select your Leaf Access Port Policy Group you created earlier: aci_p29_intpolg_access

- The Leaf Profile Name will be aci_p29_access_sp

- The Interface Profile Name will be aci_p29_acc_intf_p

Steps to Create Port-channel Interface

- Set the Leafs to 205

- Set the Interfaces to 1/57-58

- Ensure the Interface Type is set to Port Channel (PC)

- In the dropdown, select your Port Channel Policy Group you created earlier: aci_p29_intpolg_pc

- The Leaf Profile Name will be aci_p29_pc_sp

- The Interface Profile Name will be aci_p29_pc_intf_p

- Click Next

Steps to Create VPC Interface

- Set the Leafs to 207 - 208

- Set the Interfaces to 1/29

- Ensure the Interface Type is set to Virtual Port Channel (VPC)

- In the dropdown, select your VPC Port Policy Group you created earlier: aci_p29_intpolg_vpc

- The Leaf Profile Name will be aci_p29_vpc_sp

- The Interface Profile Name will be aci_p29_vpc_intf_p

- Click Next
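Behind the wizard, an interface profile boils down to a selector that names a block of ports and points at a policy group. The sketch below parses an interface string such as `1/57-58` and builds that structure. The class names (`infraAccPortP`, `infraHPortS`, `infraPortBlk`, `infraRsAccBaseGrp`) and the DN prefixes for access versus bundle policy groups are our understanding of the APIC object model and should be verified. The selector names are hypothetical; the profile and policy group names are the ones from the steps.

```python
def parse_interfaces(spec: str) -> tuple[int, int, int]:
    """Turn '1/29' or '1/57-58' into (card, first_port, last_port)."""
    card, ports = spec.split("/")
    first, _, last = ports.partition("-")
    return int(card), int(first), int(last or first)


# Assumed DN prefix per policy group kind: access ports versus PC/VPC bundles.
GROUP_PREFIX = {"access": "accportgrp", "pc": "accbundle", "vpc": "accbundle"}


def interface_profile_payload(profile: str, selector: str, interfaces: str,
                              policy_group: str, kind: str = "access") -> dict:
    """Interface profile with one selector, one port block, and one policy group reference."""
    card, first, last = parse_interfaces(interfaces)
    port_block = {
        "infraPortBlk": {
            "attributes": {
                "name": "block1",
                "fromCard": str(card), "toCard": str(card),
                "fromPort": str(first), "toPort": str(last),
            }
        }
    }
    group_ref = {
        "infraRsAccBaseGrp": {
            "attributes": {"tDn": f"uni/infra/funcprof/{GROUP_PREFIX[kind]}-{policy_group}"}
        }
    }
    return {
        "infraAccPortP": {
            "attributes": {"dn": f"uni/infra/accportprof-{profile}", "name": profile},
            "children": [
                {
                    "infraHPortS": {
                        "attributes": {"name": selector, "type": "range"},
                        "children": [port_block, group_ref],
                    }
                }
            ],
        }
    }


access_profile = interface_profile_payload(
    "aci_p29_acc_intf_p", "example_access_selector", "1/29", "aci_p29_intpolg_access")
pc_profile = interface_profile_payload(
    "aci_p29_pc_intf_p", "example_pc_selector", "1/57-58", "aci_p29_intpolg_pc", kind="pc")

print(parse_interfaces("1/57-58"))  # (1, 57, 58)
```

Note that nothing in these objects names a leaf switch, which matches the point made earlier: the selector chooses ports, and the switch profile decides which switches those ports belong to.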

Switch Profiles

A switch profile is the mapping between the policy model and the actual physical switch. The switch profile maps the leaf interface profile, which contains the interface selectors, to the physical switch. So, as soon as you apply an interface profile to a switch profile, the fabric programs the ports according to the policy group you defined.

Steps to Create Switch Profiles

- Fabric

- Access Policies

- Expand Quick Start by clicking the toggle arrow (>)

- Expand the Switch policies

- Right-click on Profile to create switch Profile

- Configure the Switch Profile name, then assign the leaf switch and the Interface Profile created above

- Click Submit
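Expressed as data, a switch profile pairs a leaf selector (a range of node IDs) with references to one or more interface profiles. The following sketch shows that pairing. The class names (`infraNodeP`, `infraLeafS`, `infraNodeBlk`, `infraRsAccPortP`) and the `from_`/`to_` attribute names are our understanding of the APIC object model and should be verified. The leaf selector names are hypothetical; the profile names and leaf IDs are the ones used in the interface configuration steps.

```python
def switch_profile_payload(profile: str, leaf_selector: str, first_leaf: int,
                           last_leaf: int, interface_profiles: list[str]) -> dict:
    """Switch profile: one leaf selector plus a reference to each interface profile."""
    if first_leaf > last_leaf:
        raise ValueError("first_leaf must not be greater than last_leaf")
    leaf_selector_obj = {
        "infraLeafS": {
            "attributes": {"name": leaf_selector, "type": "range"},
            "children": [
                {
                    "infraNodeBlk": {
                        "attributes": {
                            "name": "block1",
                            "from_": str(first_leaf),
                            "to_": str(last_leaf),
                        }
                    }
                }
            ],
        }
    }
    profile_refs = [
        {"infraRsAccPortP": {"attributes": {"tDn": f"uni/infra/accportprof-{name}"}}}
        for name in interface_profiles
    ]
    return {
        "infraNodeP": {
            "attributes": {"dn": f"uni/infra/nprof-{profile}", "name": profile},
            "children": [leaf_selector_obj, *profile_refs],
        }
    }


# Single leaf 203 for the access port, leaf pair 207-208 for the VPC.
access_sp = switch_profile_payload(
    "aci_p29_access_sp", "example_leaf_203", 203, 203, ["aci_p29_acc_intf_p"])
vpc_sp = switch_profile_payload(
    "aci_p29_vpc_sp", "example_leaf_207_208", 207, 208, ["aci_p29_vpc_intf_p"])

print(vpc_sp["infraNodeP"]["children"][0]["infraLeafS"]["children"][0])
```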

Wrapping it all up together

So, we’ve just read that all these policies in the end configure a port with specific parameters. We’ve also read that the domain and the AAEP ensure that an EPG can be programmed onto a port. But how does the ACI fabric know which EPGs to put onto the port?

Several options exist. The most common ones are:

- Static configuration

- Dynamic configuration through VMM domains

Static configuration

With static configuration, you as an administrator configure static ports at the EPG level. You need to define which port (or port channel) to use and which encap must be used. Encap in this context is usually a VLAN tag but could in theory also be a VXLAN or QinQ tag.

Another way is to attach an EPG directly onto the AAEP. This causes the EPG with the specified encap to be attached to all policy groups that are configured with this AAEP as described earlier.
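A static port on an EPG comes down to two pieces of information: a path that identifies the port (or port channel) and the encap to use on it. The sketch below builds both. The class name `fvRsPathAtt`, the path DN formats, and the `mode` values are our understanding of the APIC object model and should be verified; the pod ID of 1 is an assumption, since the article does not state it. Leaf IDs, interfaces, and the VPC policy group name are taken from the earlier steps.

```python
def port_path_dn(pod_id: int, leaf_id: int, interface: str) -> str:
    """Path to a single port, for example interface '1/29' on leaf 203."""
    return f"topology/pod-{pod_id}/paths-{leaf_id}/pathep-[eth{interface}]"


def vpc_path_dn(pod_id: int, leaf_a: int, leaf_b: int, policy_group: str) -> str:
    """Path to a VPC, identified by its leaf pair and its policy group name."""
    return f"topology/pod-{pod_id}/protpaths-{leaf_a}-{leaf_b}/pathep-[{policy_group}]"


def static_binding_payload(path_dn: str, vlan_id: int, mode: str = "regular") -> dict:
    """Static path binding: the port path plus the VLAN encap to use on it."""
    if mode not in ("regular", "native", "untagged"):
        raise ValueError("mode must be 'regular', 'native', or 'untagged'")
    return {
        "fvRsPathAtt": {
            "attributes": {"tDn": path_dn, "encap": f"vlan-{vlan_id}", "mode": mode}
        }
    }


# Pod 1 is assumed; VLAN 2900 is the first ID of the static pool range used earlier.
access_binding = static_binding_payload(port_path_dn(1, 203, "1/29"), 2900)
vpc_binding = static_binding_payload(vpc_path_dn(1, 207, 208, "aci_p29_intpolg_vpc"), 2901)

print(access_binding["fvRsPathAtt"]["attributes"]["tDn"])
print(vpc_binding["fvRsPathAtt"]["attributes"]["tDn"])
```

The encap only takes effect if the VLAN falls inside a pool that is reachable from the EPG's domain, which is why the access policy chain has to be in place first.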

Dynamic Configuration

The dynamic configuration based on VMM domains automatically creates a port group in the virtual machine manager that corresponds to the EPG when the EPG is configured to be a member of the VMM domain.