How do I connect the APICs to the fabric?

To set up the Application Centric Infrastructure (ACI) fabric, complete the following tasks:

- Rack and Cable the Hardware

- Configure each Cisco APIC's Integrated Management Controller (CIMC)

- Check APIC firmware and software

- Check the image type (NX-OS/Cisco ACI) and software version of your switches

- APIC1 initial setup

- Fabric discovery

- Set up the remainder of the APIC cluster

Rack and Cable the Hardware

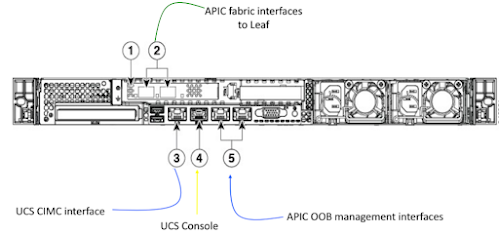

APIC Connectivity

The APICs will be connected to Leaf switches. When using multiple APICs, we recommend connecting APICs to separate Leafs for redundancy purposes.

For #9 in the figure above: on an APIC M3/L3, the VIC 1445 has four ports (port-1, port-2, port-3, and port-4 from left to right). Port-1 and port-2 make a single pair corresponding to eth2-1 on the APIC; port-3 and port-4 make another pair corresponding to eth2-2 on the APIC. Only a single connection is allowed for each pair. For example, you can connect one cable to either port-1 or port-2 and another cable to either port-3 or port-4, but you cannot cable both ports of the same pair. All ports must be configured for the same speed, either 10G or 25G.
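
The pairing rule above is easy to get wrong at the rack, so here is a minimal sketch of a pre-cabling check. It only encodes the rules stated in the paragraph above (one link per pair, same speed everywhere, 10G or 25G); the function name and the `{port: speed}` input format are hypothetical and not part of any Cisco tool.

```python
# Port pairs on the VIC 1445 as described above: ports 1-2 map to eth2-1,
# ports 3-4 map to eth2-2.
PORT_TO_INTERFACE = {1: "eth2-1", 2: "eth2-1", 3: "eth2-2", 4: "eth2-2"}
ALLOWED_SPEEDS = {"10G", "25G"}


def validate_vic1445_cabling(cabled_ports):
    """Return a list of problems for a mapping {port_number: speed}.

    An empty list means the planned cabling follows the rules in the text.
    """
    problems = []
    used_interfaces = {}  # interface name -> first port cabled in that pair
    for port, speed in sorted(cabled_ports.items()):
        if port not in PORT_TO_INTERFACE:
            problems.append(f"port-{port} does not exist on the VIC 1445")
            continue
        if speed not in ALLOWED_SPEEDS:
            problems.append(f"port-{port} uses unsupported speed {speed}")
        interface = PORT_TO_INTERFACE[port]
        if interface in used_interfaces:
            problems.append(
                f"port-{used_interfaces[interface]} and port-{port} are both "
                f"cabled, but only one link per pair ({interface}) is allowed"
            )
        else:
            used_interfaces[interface] = port
    # Every cabled port has to run at one common speed.
    if len(set(cabled_ports.values())) > 1:
        problems.append("all ports must run at the same speed")
    return problems


print(validate_vic1445_cabling({1: "25G", 3: "25G"}))  # valid plan
print(validate_vic1445_cabling({1: "10G", 2: "10G"}))  # two cables in one pair
```

An empty list means the plan is consistent with the rules; anything else is a human-readable reason to re-cable.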

Switch Connectivity

Every Leaf switch needs to connect to every Spine switch. This gives you a fully redundant switching fabric. In addition to the fabric network connections, you'll also connect redundant PSUs to separate power sources, the management interface to your 1G out-of-band management network, and a console connection to a terminal server (optional, but highly recommended).
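
If you keep a cabling plan in a spreadsheet or script, the leaf-to-spine requirement can be checked before anything is racked. The following is a minimal sketch under that assumption; the switch names and the list-of-pairs format are made up for illustration.

```python
def missing_fabric_links(leaves, spines, links):
    """Return the (leaf, spine) pairs that have no cable between them.

    `links` is an iterable of two-element tuples; the order of the two
    endpoints inside each tuple does not matter.
    """
    cabled = {frozenset(link) for link in links}
    return [
        (leaf, spine)
        for leaf in leaves
        for spine in spines
        if frozenset((leaf, spine)) not in cabled
    ]


# Hypothetical two-leaf, two-spine plan with one link forgotten.
plan = [
    ("leaf101", "spine201"),
    ("spine202", "leaf101"),
    ("leaf102", "spine201"),
]
print(missing_fabric_links(["leaf101", "leaf102"], ["spine201", "spine202"], plan))
```

For the plan shown, the only pair reported is leaf102 to spine202.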

Configure each Cisco APIC's Integrated Management Controller (CIMC)

When you first connect the CIMC port, marked "mgmt" on the rear of the appliance, it is configured for DHCP by default. Cisco recommends that you assign a static address for this purpose to avoid any loss of connectivity or changes to address leases.

You can modify the CIMC details by connecting a crash cart (physical monitor, USB keyboard, and mouse) to the server and powering it on. During the boot sequence, you will be prompted to press "F8" to configure the CIMC.

From here you will be presented with a screen similar to the one below, depending on your firmware version.

- For the "NIC mode" we recommend using Dedicated which utilizes the dedicated "mgmt." interface in the rear of the APIC appliance for CIMC platform management traffic.

- "Shared LOM" mode sends your CIMC traffic over the LAN on Motherboard (LOM) port along with the APIC's OS management traffic. This can cause issues with fabric discovery if not properly configured and is not recommended by Cisco.

Aside from the IP address details, the rest of the options can be left alone unless there's a specific reason to modify them. Once a static address has been configured, save the settings and reboot.

After a few minutes you should be able to reach the CIMC web interface using the newly assigned IP address along with the default CIMC credentials of "admin" and "password". It's recommended that you change the default CIMC admin password after first use.

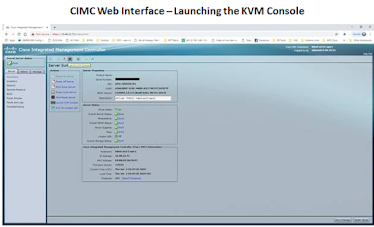

Logging into the CIMC Web Interface

To log in to the CIMC, open a web browser to https://<CIMC_IP>. You'll need to ensure you have Flash installed and permitted for the URL. Once you've logged in with the default credentials, you'll be able to manage all the CIMC features, including launching the KVM console.

Check APIC firmware and software

Note that all of your APICs must run the same version when joining a cluster. This may require manually upgrading or downgrading your APICs before joining them to the fabric. Instructions on upgrading standalone APICs using KVM vMedia can be found in the "Cisco APIC Management, Installation, Upgrade, and Downgrade Guide" for your respective version.

Switch nodes can be running any version of the ACI switch image and can be upgraded or downgraded via firmware policy once joined to the fabric.

Check the image type (NX-OS/Cisco ACI) and software version of switches

For a Nexus 9000 series switch to be added to an ACI fabric, it needs to be running an ACI image. Switches that are ordered as "ACI Switches" will typically be shipped with an ACI image. If you have existing standalone Nexus 9000 switches running traditional NX-OS, you may need to install the appropriate image (for example, aci-n9000-dk9.14.0.1h.bin).

For detailed instructions on converting a standalone NX-OS switch to ACI mode, see the "Cisco Nexus 9000 Series NX-OS Software Upgrade and Downgrade Guide" on CCO for your respective version of NX-OS.

APIC1 initial setup

Now that you have basic remote connectivity, you can complete the setup of your ACI fabric from any workstation with network access to the APIC. If the server is not powered on, power it on now from the CIMC interface. The APIC will take 3-4 minutes to fully boot. Next, open a console session via the CIMC KVM console. Assuming the APIC has completed the boot process, it should be sitting at the prompt "Press any key to continue…". Pressing a key starts the setup utility.

From here, the APIC will guide you through the initial setup dialogue. Answer each question carefully. Some of the items configured can't be changed after initial setup, so review your configuration before submitting it.

Fabric Name: User defined, will be the logical friendly name of your fabric.

Fabric ID: Leave this ID as the default 1.

Number of Controllers in fabric: Set this to the number of APICs you plan to configure. This can be increased or decreased later.

Pod ID: The ID of the Pod to which this APIC is connected. If this is your first APIC or you have only a single Pod installed, this will always be 1. If you are locating additional APICs across multiple Pods, assign each one the ID of the Pod where it's connected.

Standby Controller: Beyond your active controllers (typically 3), you can designate additional APICs as standby. In the event of an APIC failure, you can promote a standby to assume the identity of the failed APIC.

APIC-X: A special-use APIC model used for telemetry and other heavy ACI App purposes. For your initial setup this typically would not be applicable. Note: In a future release this feature may be referenced as "ACI Services Engine".

TEP Pool: This is a subnet of addresses used for internal fabric communication. This subnet will NOT be exposed to your legacy network unless you're deploying the Cisco AVS or Cisco ACI Virtual Edge. Regardless, our recommendation is to assign an unused subnet with a prefix length between /16 and /21. The size of the subnet used will impact the scale of your Pod. Most customers allocate an unused /16 and move on. This value can NOT be changed once configured; modifying it requires a wipe of the fabric.

Note: The 172.17.0.0/16 subnet is not supported for the infra TEP pool due to a conflict of address space with the docker0 interface. If you must use the 172.17.0.0/16 subnet for the infra TEP pool, you must manually configure the docker0 IP address to be in a different address space on each Cisco APIC before you attempt to put the Cisco APICs in a cluster.
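
Because the TEP pool cannot be changed without wiping the fabric, it is worth sanity-checking the candidate subnet before typing it into the setup dialogue. The sketch below uses Python's standard `ipaddress` module and only encodes the guidance from the text (a /16 to /21 prefix, the docker0 conflict). The function name is hypothetical, and treating any overlap with 172.17.0.0/16 as a problem, rather than only an exact match, is a conservative assumption on my part.

```python
import ipaddress

# Address space that the text says conflicts with the docker0 interface.
DOCKER0_DEFAULT = ipaddress.ip_network("172.17.0.0/16")


def check_tep_pool(cidr, in_use=()):
    """Return a list of warnings for a candidate infra TEP pool.

    `in_use` is an optional iterable of subnets already used elsewhere in
    your network. Raises ValueError if `cidr` is not a valid network.
    """
    warnings = []
    pool = ipaddress.ip_network(cidr, strict=True)
    if not 16 <= pool.prefixlen <= 21:
        warnings.append(f"{pool} is outside the recommended /16 to /21 range")
    if pool.overlaps(DOCKER0_DEFAULT):
        warnings.append(f"{pool} overlaps the docker0 range {DOCKER0_DEFAULT}")
    for other in in_use:
        other_net = ipaddress.ip_network(other)
        if pool.overlaps(other_net):
            warnings.append(f"{pool} overlaps in-use subnet {other_net}")
    return warnings


print(check_tep_pool("10.0.0.0/16"))     # no warnings expected
print(check_tep_pool("172.17.0.0/16"))   # docker0 conflict
```

Passing your existing routed subnets as `in_use` also catches the "unused subnet" requirement.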

Infra VLAN: This is another important item. It is the VLAN ID for all fabric connectivity. This VLAN ID should be allocated solely to ACI and not used by any other legacy device in your network. Though this VLAN is used for fabric communication, there are certain instances where it may need to be extended outside of the fabric, such as the deployment of the Cisco AVS/AVE. For that reason, we also recommend ensuring that the Infra VLAN ID you select does not overlap with any "reserved" VLANs found on your networks. Cisco recommends a VLAN smaller than VLAN 3915 as being a safe option, as it is not a reserved VLAN on Cisco DC platforms as of today. This value can NOT be changed once configured; modifying it requires a wipe of the fabric.

BD Multicast Pool (GIPO): Used for internal connectivity. We recommend leaving this as the default or assigning a unique range not used elsewhere in your infrastructure. This value can NOT be changed once configured; modifying it requires a wipe of the fabric.

Once the setup dialogue has been completed, it will allow you to review your entries before submitting. If you need to make any changes, enter "y"; otherwise enter "n" to apply the configuration. After applying the configuration, allow the APIC 4-5 minutes to bring all services online and initialize the REST login services before attempting to log in through a web browser.

Fabric discovery

With our first APIC fully configured, we will now log in to the GUI and complete the discovery process for our switch nodes.

When logging in for the first time, you may have to accept the certificate warnings and/or add your APIC to the exception list.

Now we'll proceed with the fabric discovery procedure. Navigate to Fabric tab > Inventory sub-tab > Fabric Membership folder.

This view shows your registered fabric nodes. Click on the Nodes Pending Registration tab in the work pane and you should see your first Leaf switch awaiting discovery. Note that this will be one of the Leaf switches to which the APIC is directly connected.

To register our first node, click on the first row, then from the Actions menu (Tool Icon) select Register.

The Register wizard will pop up and require some details to be entered, including the Node ID you wish to assign and the Node Name (hostname).

Hostnames can be modified, but the Node ID will remain assigned until the switch is decommissioned and removed from the APIC. This information is provided to the APIC via LLDP TLVs. If a switch was previously registered to another fabric without being erased, it would never appear as an unregistered node, so it's important that all switches have been wiped clean prior to discovery. It's a common practice for Leaf switches to be assigned Node IDs from 100+ and Spine switches to be assigned IDs from 200+. You can implement your own scheme to accommodate your own numbering convention or larger fabrics. RL TEP Pool is reserved for Remote Leaf usage only and doesn't apply to local fabric-connected Leaf switches. Rack Name is an optional field.
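
The same registration can also be scripted against the APIC REST API instead of the GUI wizard. The sketch below only builds the JSON body; it assumes the node identity policy object is `fabricNodeIdentP` under `uni/controller/nodeidentpol`, posted to `/api/node/mo/uni/controller/nodeidentpol.json` on an authenticated session. That matches my understanding of the APIC object model, but verify it against the API reference for your APIC version. The serial number and names are placeholders.

```python
import json


def build_node_registration(serial, node_id, name):
    """Build the JSON body that registers one switch by serial number.

    Assumption: the APIC expects a fabricNodeIdentP object whose DN is
    derived from the switch serial number. Attribute values are strings.
    """
    return {
        "fabricNodeIdentP": {
            "attributes": {
                "dn": f"uni/controller/nodeidentpol/nodep-{serial}",
                "serial": serial,
                "nodeId": str(node_id),
                "name": name,
            }
        }
    }


# Placeholder serial number; use the value shown under Nodes Pending Registration.
body = build_node_registration("FDO00000000", 101, "leaf101")
print(json.dumps(body, indent=2))
```

Generating the bodies from a list of serial numbers keeps the Node ID scheme (Leafs from 100+, Spines from 200+) consistent across the fabric.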

Once the registration details have been submitted, the entry for this leaf node will move from the Nodes Pending Registration tab to the Registered Nodes tab under Fabric Membership. The node will take 3 to 4 minutes to complete the discovery, which includes the bootstrap process and bringing the switch to an "Active" state. During the process, you will notice that a tunnel endpoint (TEP) address gets assigned. This is pulled from the available addresses in your Infra TEP pool (such as 10.0.0.0/16).

The fabric discovery process in depth:

First, Cisco APIC uses LLDP neighbor discovery to discover a switch.

After a successful discovery, the switch sends a request for an IP address via DHCP.

Cisco APIC then allocates an address from the DHCP pool. The switch uses this address as a TEP address. You can verify the allocated address from the shell by using the acidiag fnvread command and by pinging the switch from the Cisco APIC.

In the DHCP Offer packet, Cisco APIC passes the boot file information for the switch. The switch uses this information to acquire the boot file from Cisco APIC via HTTP GET to port 7777 of Cisco APIC.

The HTTP 200 OK response to the boot file request identifies the firmware that the switch will load. The switch then retrieves this file from the Cisco APIC with another HTTP GET to port 7777 on the Cisco APIC.

Finally, Cisco APIC initiates an encrypted TCP session to the switch, which is listening on TCP port 12183, to establish the policy element Intra-Fabric Messaging (IFM).

In summary, the initial steps of the discovery process are:

- LLDP neighbor discovery

- Cisco APIC assigns TEP address to the switch via DHCP

- The switch downloads the boot file from Cisco APIC and performs firmware upgrade if necessary.

- Policy element exchange via intra-fabric messaging (IFM).

Note: Communication between the various nodes and processes in the Cisco ACI Fabric uses IFM, and IFM uses SSL-encrypted TCP communication. Each Cisco APIC and fabric node has 1024-bit SSL keys that are embedded in secure storage. The SSL certificates are signed by the Cisco Manufacturing Certificate Authority (CMCA).

In the discovery process, a fabric node is considered active when the Cisco APIC and the node can exchange heartbeats through the IFM process.

Node status may fluctuate between several states during the fabric registration process. The states are shown in the Fabric Node Vector table. The APIC CLI command to show the Fabric Node Vector table is acidiag fnvread.

The states and their descriptions are as follows:

- Unknown: the node has been discovered but no Node ID policy is configured

- Undiscovered: a Node ID is configured but the node has not yet been discovered

- Discovering: the node has been discovered but an IP address has not yet been assigned

- Unsupported: the node is not a supported model

- Disabled: the node has been decommissioned

- Inactive: there is no IP connectivity to the node

- Active: the node is active
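
When you are registering many switches, it helps to reduce the state list above to "which nodes still need attention, and why". Here is a minimal sketch of that lookup. It takes a plain `{node_name: state}` dictionary as input, which is a hypothetical format; it does not parse real `acidiag fnvread` output.

```python
# State descriptions taken from the list above.
NODE_STATES = {
    "unknown": "discovered, but no Node ID policy configured",
    "undiscovered": "Node ID configured, but not yet discovered",
    "discovering": "discovered, but IP not yet assigned",
    "unsupported": "not a supported model",
    "disabled": "decommissioned",
    "inactive": "no IP connectivity",
    "active": "active",
}


def nodes_needing_attention(node_states):
    """Map each non-active node name to a description of its state."""
    report = {}
    for name, state in node_states.items():
        key = state.strip().lower()
        if key == "active":
            continue  # nothing to do for healthy nodes
        report[name] = NODE_STATES.get(key, f"unrecognized state {state!r}")
    return report


# Hypothetical snapshot taken part-way through discovery.
snapshot = {"leaf101": "active", "leaf102": "Discovering", "spine201": "inactive"}
for node, reason in nodes_needing_attention(snapshot).items():
    print(f"{node}: {reason}")
```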

Note: ACI uses intra-fabric messaging (IFM) packets to communicate between the different nodes, including between leaf and spine switches. These IFM packets are typically TCP packets secured by 1024-bit SSL encryption, and the keys used for encryption are stored in secure storage. These keys are signed by the Cisco Manufacturing Certificate Authority (CMCA). Any issue with the IFM process can prevent fabric nodes from communicating and from joining the fabric.

After the first Leaf has been discovered and moved to an Active state, it will then discover every Spine switch it's connected to. Go ahead and register each Spine switch in the same manner.

Since each Leaf switch connects to every Spine switch, once the first Spine completes the discovery process you should see all remaining Leaf switches pending registration. Go ahead and register all remaining nodes, then wait for all switches to transition to an Active state.

With all the switches online and active, our next step is to finish the APIC cluster configuration for the remaining nodes. Navigate to System > Controllers sub menu > Controllers Folder > apic1 > Clusters as Seen by this Node folder.

From here you will see your single APIC along with other important details such as the Target Cluster Size and Current Cluster Size. Assuming you configured apic1 with a cluster size of 3, we'll have two more APICs to set up.

Set up the remainder of the APIC cluster

At this point, open the KVM console for APIC2 and run through the setup dialogue just as we did for APIC1 previously. When joining additional APICs to an existing cluster, it's imperative that you configure the same Fabric Name, Infra VLAN, and TEP Pool. The controller ID should be set to 2.

You'll notice that you will not be prompted to configure admin credentials. This is expected, as they will be inherited from APIC1 once you join the cluster.
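
Mismatched answers in the setup dialogue are a common reason an additional APIC fails to join, so it can help to write the planned values down and compare them first. The following is a minimal sketch of such a check. The `ApicSetup` class and its field names are hypothetical, and the expectation that controller IDs run sequentially from 1 is an assumption based on the APIC1/APIC2/APIC3 numbering used in this walkthrough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApicSetup:
    """Planned setup-dialogue answers for one APIC (hypothetical structure)."""
    controller_id: int
    fabric_name: str
    infra_vlan: int
    tep_pool: str


def check_cluster_setup(setups):
    """Return a list of problems found across the planned APIC setups."""
    problems = []
    if not setups:
        return ["no APIC setups provided"]
    first = setups[0]
    # These three values must be identical on every APIC in the cluster.
    for setup in setups[1:]:
        for field in ("fabric_name", "infra_vlan", "tep_pool"):
            expected, actual = getattr(first, field), getattr(setup, field)
            if actual != expected:
                problems.append(
                    f"APIC{setup.controller_id}: {field} is {actual!r}, "
                    f"expected {expected!r}"
                )
    # Assumption: controller IDs are unique and numbered 1..N.
    ids = sorted(s.controller_id for s in setups)
    if ids != list(range(1, len(ids) + 1)):
        problems.append(f"controller IDs {ids} are not unique and sequential from 1")
    return problems


plan = [
    ApicSetup(1, "fabric1", 3900, "10.0.0.0/16"),
    ApicSetup(2, "fabric1", 3900, "10.0.0.0/16"),
    ApicSetup(3, "fabric1", 3901, "10.0.0.0/16"),  # deliberate Infra VLAN typo
]
for problem in check_cluster_setup(plan):
    print(problem)
```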

Allow APIC2 to fully boot and bring its services online. You can confirm everything was successfully configured as soon as you see the entry for APIC2 in the Active Controllers view. During this time, it will also begin syncing with APIC1's configuration. Allow 4-5 minutes for this process to complete. You may see the State of the APICs transition back and forth between Fully Fit and Data Layer Synchronization in Progress. Continue through the same process for APIC3, ensuring you assign the correct controller ID.

This concludes the entire fabric discovery process. All your switches & controllers will now be in sync and under a single pane of management. Your ACI fabric can be managed from any APIC IP. All APICs are active and maintain a consistent operational view of your fabric.

The complete steps of IFM (Intra-Fabric Messaging)

Once all of the steps below have completed, the fabric is ready for production configuration.

- Link Layer Discovery Protocol (LLDP) Neighbor Discovery

- Tunnel End Point (TEP) IP address assignment to the node via DHCP

- Node software upgraded if necessary

- IS-IS adjacency

- Certificate validation

- Start of DME process on switches

- Tunnel setup (iVXLAN)

- Policy Element IFM Setup

Fabric Initialization Tasks

- Configure APIC1

- Add first Leaf to fabric.

- Add all spines to fabric

- Add remaining Leafs to fabric.

- Add remaining APICs to fabric

- Set up NTP

- Configure OOB Management IP Pool

- Configure Export Policies for Configuration and Tech Support Exports

- Configure Firmware Policies (For Upgrades)
