- DMVPN (Dynamic Multipoint VPN) is a routing technique we can use to build a VPN network with multiple sites without having to statically configure all devices.

- It’s a “hub and spoke” network where the spokes will be able to communicate with each other directly without having to go through the hub.

- Encryption is supported through IPsec which makes DMVPN a popular choice for connecting different sites using regular Internet connections.

- It’s a great backup or alternative to private networks like MPLS VPN.

- A popular alternative to DMVPN is FlexVPN.

- DMVPN is an overlay hub and spoke technology that allows an enterprise to connect its offices across an NBMA network.

A final note that must be reiterated is that DMVPN is a routing technique and is NOT a security feature. By default, any traffic sent over DMVPN is in clear text because GRE is used as the transport tunnel. However, this traffic can be encrypted with IPsec if required.

There are four pieces to the DMVPN puzzle:

- Multipoint GRE (mGRE)

- NHRP (Next Hop Resolution Protocol)

- Routing (RIP, EIGRP, OSPF, BGP, etc.)

- IPsec (not required but recommended)

Multipoint GRE

Our “regular” GRE tunnels are point-to-point and don’t scale well. For example, let’s say we have a company network with some sites that we want to connect to each other using regular Internet connections:

We have one router that represents the HQ and there are four branch offices. Let’s say that we have the following requirements:

- Each branch office must be connected to the HQ.

- Traffic between Branch 1 and Branch 2 must be tunnelled directly.

- Traffic between Branch 3 and Branch 4 must be tunnelled directly.
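To get a feel for how these requirements translate into point-to-point GRE tunnels, here is a small Python sketch that counts them. The site names follow the example; the helper functions are hypothetical and only count tunnels, they do not configure anything.

```python
from itertools import combinations

# Sites from the example: one HQ and four branch offices.
SITES = ["HQ", "Branch1", "Branch2", "Branch3", "Branch4"]


def required_tunnels():
    """Point-to-point tunnels needed for the three requirements above."""
    tunnels = {frozenset(("HQ", site)) for site in SITES if site != "HQ"}
    tunnels.add(frozenset(("Branch1", "Branch2")))
    tunnels.add(frozenset(("Branch3", "Branch4")))
    return tunnels


def full_mesh_tunnels(sites):
    """Every pair of sites gets its own tunnel: n * (n - 1) / 2."""
    return {frozenset(pair) for pair in combinations(sites, 2)}


def interfaces_per_router(tunnels):
    """Each point-to-point tunnel needs a tunnel interface on both ends."""
    counts = {site: 0 for site in SITES}
    for tunnel in tunnels:
        for site in tunnel:
            counts[site] += 1
    return counts


needed = required_tunnels()
print(len(needed))                    # 6 tunnels for the stated requirements
print(interfaces_per_router(needed))  # HQ alone needs 4 tunnel interfaces
print(len(full_mesh_tunnels(SITES)))  # 10 tunnels if every site pair is connected
```

Six tunnels already mean twelve tunnel interfaces to configure by hand, and the number grows quadratically if more site pairs need a direct path.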

Things will get messy quickly: we must create multiple tunnel interfaces, set the source and destination IP addresses, and so on. It will work, but it’s not a very scalable solution. Multipoint GRE, as the name implies, allows us to have multiple destinations. When we use it, our picture could look like this:

- When we use GRE Multipoint, there will be only one tunnel interface on each router.

- The HQ for example has one tunnel with each branch office as its destination.

- Now you might be wondering, what about the requirement where branch office 1/2 and branch office 3/4 have a direct tunnel?

- Right now, we have a hub and spoke topology.

- The cool thing about DMVPN is that we use multipoint GRE so we can have multiple destinations. When we need to tunnel something between branch offices 1 and 2 or 3 and 4, the new tunnels are “built” automatically, as seen in the figure above.

When there is traffic between the branch offices, we can tunnel it directly instead of sending it through the HQ router. This sounds pretty cool, but it introduces some problems…

When we configure point-to-point GRE tunnels, we must configure a source and destination IP address that are used to build the GRE tunnel. When two branch routers want to tunnel some traffic, how do they know what IP addresses to use?

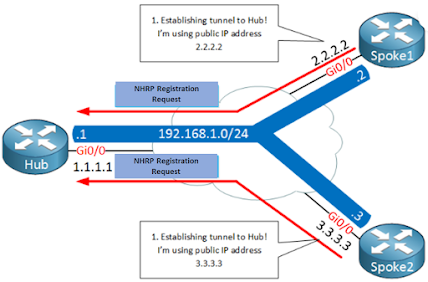

Above we have our HQ and two branch routers, branch1 and branch2. Each router is connected to the Internet and has a public IP address:

- HQ: 1.1.1.1

- Branch1: 2.2.2.2

- Branch2: 3.3.3.3

On the GRE multipoint tunnel interface, we use a single subnet with the following private IP addresses:

- HQ: 192.168.1.1

- Branch1: 192.168.1.2

- Branch2: 192.168.1.3

Let’s say that we want to send a ping from branch1’s tunnel interface to the tunnel interface of branch2. Here’s what the GRE encapsulated IP packet will look like:

The “inner” source and destination IP addresses are known to us: these are the IP addresses of the tunnel interfaces. We encapsulate this IP packet, put a GRE header in front of it, and then we must fill in the “outer” source and destination IP addresses so that this packet can be routed on the Internet. The branch1 router knows its own public IP address, but it has no clue what the public IP address of branch2 is…
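The encapsulation described above can be sketched as two nested IP headers. This is a minimal Python model using the addresses from the example; the class and function names are hypothetical, and no real packets are built.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IPHeader:
    src: str
    dst: Optional[str]  # None means "not known yet"


@dataclass
class GrePacket:
    outer: IPHeader  # public (underlay) addresses, routed on the Internet
    inner: IPHeader  # tunnel (overlay) addresses
    payload: str


def encapsulate(inner_src, inner_dst, own_public_ip, peer_public_ip=None):
    """Wrap an inner IP packet in GRE and add an outer IP header."""
    return GrePacket(
        outer=IPHeader(src=own_public_ip, dst=peer_public_ip),
        inner=IPHeader(src=inner_src, dst=inner_dst),
        payload="ICMP echo request",
    )


def can_be_sent(packet):
    """The packet is only routable once the outer destination is filled in."""
    return packet.outer.dst is not None


# branch1 pings branch2's tunnel address but only knows its own public IP.
pkt = encapsulate("192.168.1.2", "192.168.1.3", own_public_ip="2.2.2.2")
print(can_be_sent(pkt))  # False: the outer destination is missing

# Once the public IP of branch2 is known, the packet is complete.
pkt.outer.dst = "3.3.3.3"
print(can_be_sent(pkt))  # True
```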

To fix this problem, we need some help from another protocol…

NHRP (Next Hop Resolution Protocol)

We need something that helps our branch1 router figure out the public IP address of the branch2 router. We do this with a protocol called NHRP (Next Hop Resolution Protocol). Here’s an explanation of how NHRP works:

- One router will be the NHRP server.

- All other routers will be NHRP clients.

- NHRP clients register themselves with the NHRP server and report their public IP address.

- The NHRP server keeps track of all public IP addresses in its cache.

- When one router wants to tunnel something to another router, it asks the NHRP server for the public IP address of the other router.

Since NHRP uses this server and client model, it makes sense to use a hub and spoke topology for multipoint GRE. Our hub router will be the NHRP server, and all other routers will be the spokes.

Here’s an illustration of how NHRP works with multipoint GRE:

Above we have two spoke routers (NHRP clients) which establish a tunnel to the hub router. Later, once we look at the configurations, you will see that the destination IP address of the hub router is statically configured on the spoke routers. The hub router dynamically accepts spoke routers. The routers use an NHRP registration request message to register their public IP addresses with the hub.

- The hub, our NHRP server, will create a mapping between the public IP addresses and the IP addresses of the tunnel interfaces.

- A few seconds later, spoke1 decides that it wants to send something to spoke2. It needs to figure out the destination public IP address of spoke2, so it sends an NHRP resolution request, asking the hub router what the public IP address of spoke2 is.

- The hub router checks its cache, finds an entry for spoke2 and sends the NHRP resolution reply to spoke1 with the public IP address of spoke2.

- Spoke1 now knows the destination public IP address of spoke2 and is able to tunnel something directly. This is great: we only needed the hub to figure out what the public IP address is, and all traffic can be sent from spoke to spoke directly.
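The registration and resolution steps above can be sketched as a toy Python model. It is not an NHRP implementation: messages are plain method calls, and the class names are hypothetical. The spokes reuse the branch1 and branch2 addresses from the earlier example, which is an assumption made for illustration.

```python
class NhrpServer:
    """Toy next hop server (the hub): maps tunnel IP -> public IP."""

    def __init__(self):
        self.cache = {}

    def register(self, tunnel_ip, public_ip):
        """Handle a registration request from a spoke."""
        self.cache[tunnel_ip] = public_ip

    def resolve(self, tunnel_ip):
        """Handle a resolution request; returns None for an unknown spoke."""
        return self.cache.get(tunnel_ip)


class NhrpClient:
    """Toy spoke: registers with the hub and caches resolution replies."""

    def __init__(self, tunnel_ip, public_ip, server):
        self.tunnel_ip = tunnel_ip
        self.public_ip = public_ip
        self.server = server
        self.cache = {}

    def register(self):
        self.server.register(self.tunnel_ip, self.public_ip)

    def lookup(self, peer_tunnel_ip):
        """Return the peer's public IP, asking the hub only on a cache miss."""
        if peer_tunnel_ip not in self.cache:
            public_ip = self.server.resolve(peer_tunnel_ip)
            if public_ip is None:
                raise LookupError(f"{peer_tunnel_ip} is not registered")
            self.cache[peer_tunnel_ip] = public_ip
        return self.cache[peer_tunnel_ip]


hub = NhrpServer()
spoke1 = NhrpClient("192.168.1.2", "2.2.2.2", hub)
spoke2 = NhrpClient("192.168.1.3", "3.3.3.3", hub)

# Both spokes register their public addresses with the hub.
spoke1.register()
spoke2.register()

# spoke1 resolves spoke2's tunnel address and can now tunnel to it directly.
print(spoke1.lookup("192.168.1.3"))  # 3.3.3.3
```

After the first lookup the answer sits in spoke1's own cache, so the hub is only involved in finding the address, not in carrying the traffic.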

When we talk about DMVPN, we often refer to an underlay and overlay network:

- The underlay network is the network we use for connectivity between the different routers, for example the Internet.

- The overlay network is our private network with GRE tunnels.

NHRP is a bit like ARP or frame-relay inverse ARP. Instead of mapping L2 to L3 information, we are now mapping a tunnel IP address to an NBMA IP address.

DMVPN Phases

DMVPN has different versions, which we call phases. There are three of them:

- Phase 1

- Phase 2

- Phase 3

Phase 1

- Phase 1 is the first phase that was defined when Cisco implemented this technology, and it is strictly designed for hub and spoke communication only.

- With phase 1 we use NHRP so that spokes can register themselves with the hub.

- The hub is the only router that uses a multipoint GRE interface; all spokes use regular point-to-point GRE tunnel interfaces.

- This means that there is no direct spoke-to-spoke communication. All traffic has to go through the hub!

- Since our traffic has to go through the hub, our routing configuration will be quite simple.

- Spoke routers only need a summary or default route to the hub to reach other spoke routers.

- R1 is acting as the DMVPN hub for this network and is therefore the NHS for NHRP registration of the spokes.

- In phase 1 the GRE tunnels shown are multipoint GRE on the hub and point-to-point on the spokes.

- This forces hub and spoke traffic flows on the spokes.

The first two commands shown create a GRE tunnel and set the VPN address. They are nothing new to DMVPN configurations.

- no ip redirects - Disables ICMP redirects on this interface. With DMVPN there can be cases where traffic initially flows through the hub to reach its destination. If the next-hop address isn't set to the hub, this would cause an ICMP redirect message to be sent. Disabling redirects prevents excessive redirect traffic.

- no ip split-horizon eigrp 1 - Disables split horizon on the hub so that routes learned from one spoke can be sent down to another. Because the EIGRP feasibility condition keeps paths loop-free, this will not cause a loop.

- ip nhrp authentication - Requires spokes to authenticate with the hub before registration or resolution requests can be made. This is optional but advised.

- ip nhrp map multicast dynamic - Allows the hub to send all multicast traffic (including routing protocol hellos) to all dynamically learned spokes.

- ip nhrp map 100.64.0.1 10.1.1.1 - Creates a static NHRP mapping which specifies that the VPN address 100.64.0.1 maps to the physical address 10.1.1.1 in the underlying topology.

- ip nhrp network-id - Specifies the ID of the DMVPN cloud. It is required so that a router can distinguish between DMVPN networks, as more than one can be created on a router.

- ip nhrp nhs 100.64.0.1 - Specifies the next hop server on the network. Notice that this is the VPN address, so a static mapping for it is needed. This is the reason for the mapping command earlier.

- ip summary-address eigrp 1 0.0.0.0 0.0.0.0 - Sends a default summary route to the spokes. Since only hub and spoke traffic flows are allowed, default routing via the hub reduces the routing table information on the spokes.

- tunnel source GigabitEthernet1 - Specifies the source of the tunnel interface. The address of this interface will be advertised in the registration message and should be reachable from the spokes.

- tunnel mode gre multipoint - Configures the interface as a multipoint GRE interface, so an explicit destination doesn't need to be specified.

Next, a brief review of the spoke configuration can be seen below:

The configuration of a spoke router is simpler: it only requires the usual IP address configuration, the NHS specification and mapping, and the authentication parameters. The most noticeable difference is the explicit specification of the tunnel destination. Point-to-point tunnels on the spokes force a hub and spoke topology.
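Why point-to-point tunnels on the spokes force traffic through the hub can be illustrated with a short Python sketch. The hub's NBMA address 10.1.1.1 comes from the command descriptions above; the spoke addresses for R2 and R3 and all class names are assumptions made for illustration.

```python
class PointToPointTunnel:
    """Spoke side in phase 1: one fixed, statically configured destination."""

    def __init__(self, destination):
        self.destination = destination

    def outer_destination(self, overlay_next_hop):
        # The overlay next hop is irrelevant: everything goes to the hub.
        return self.destination


class MultipointTunnel:
    """Hub side: the destination is looked up per packet in the NHRP cache."""

    def __init__(self):
        self.nhrp_cache = {}  # tunnel (VPN) address -> NBMA address

    def register(self, tunnel_ip, nbma_ip):
        self.nhrp_cache[tunnel_ip] = nbma_ip

    def outer_destination(self, overlay_next_hop):
        return self.nhrp_cache[overlay_next_hop]


HUB_NBMA = "10.1.1.1"            # from the command descriptions above
R2 = ("100.64.0.2", "10.1.1.2")  # hypothetical (tunnel, NBMA) addresses
R3 = ("100.64.0.3", "10.1.1.3")  # hypothetical NBMA address

hub = MultipointTunnel()
for tunnel_ip, nbma_ip in (R2, R3):
    hub.register(tunnel_ip, nbma_ip)

r2_tunnel = PointToPointTunnel(destination=HUB_NBMA)


def phase1_path(spoke_tunnel, hub_tunnel, dst_tunnel_ip):
    """Outer destinations a packet sees on its way to another spoke."""
    first_hop = spoke_tunnel.outer_destination(dst_tunnel_ip)
    second_hop = hub_tunnel.outer_destination(dst_tunnel_ip)
    return [first_hop, second_hop]


# R2 -> R3 always crosses the hub first, then the hub forwards it to R3.
print(phase1_path(r2_tunnel, hub, R3[0]))  # ['10.1.1.1', '10.1.1.3']
```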

Phase 2

The disadvantage of phase 1 is that there are no direct spoke-to-spoke tunnels. In phase 2, all spoke routers use multipoint GRE tunnels, so we do have direct spoke-to-spoke tunneling. When a spoke router wants to reach another spoke, it will send an NHRP resolution request to the hub to find the NBMA IP address of the other spoke.

- In DMVPN phase 2 when a spoke router wishes to communicate with another spoke router it will look at its routing table to determine the next-hop address.

- For R2 to reach R3's loopback address, it needs to send the traffic to 100.64.0.3, which is reachable through the Tunnel0 interface.

- Since this is now a multipoint GRE interface, R2 will check its NHRP cache to determine the underlay address of R3 so that the packet can be routed across the underlying network. This can be seen with show dmvpn.

The final part of DMVPN phase 2 is a brief look at the configuration changes made to enable this phase, starting with the hub tunnel configuration:

The first configuration change is the removal of the summary route, as it would cause the next-hop address to become the hub and therefore cause the data plane to flow through the hub.

- In addition, EIGRP is told not to change the next-hop value when it propagates routes across the DMVPN.

- This is what controls spoke-to-spoke traffic flows.

- Each routing protocol implements this differently, however, so caution is needed.

- For example, in OSPF this is achieved by setting the network type to broadcast; iBGP doesn't change the next hop by default anyway, and eBGP can use third-party next hop.

- Lastly, IS-IS can be configured by setting the network type to broadcast, though care needs to be taken to ensure database synchronization is complete.

The configuration on the spokes:

- On the spokes the most noticeable change is the conversion of the tunnel from a point-to-point GRE tunnel to a multipoint GRE.

- This allows spoke-to-spoke traffic flows as data isn't forced to be sent to the hub.

- The other NHRP mapping command tells the spoke to send any multicast traffic to the hub router.

- This is to allow EIGRP neighbours to form as multicast traffic is then sent to 10.1.1.1 directly.

- Note that the hub address is referenced as the underlay address.
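The phase 2 forwarding decision described in this section has two steps: the routing table gives the tunnel next hop, and the NHRP cache turns that into an underlay address. The Python sketch below models both steps and shows why the hub must preserve the original next hop. The tunnel addresses 100.64.0.1 and 100.64.0.3 and the hub's underlay address 10.1.1.1 come from the text; R3's loopback prefix and underlay address are hypothetical, and R2's NHRP cache is pre-populated for brevity instead of being filled by a resolution request.

```python
HUB_TUNNEL_IP = "100.64.0.1"
R3_TUNNEL_IP = "100.64.0.3"
R3_LOOPBACK = "172.16.3.1/32"  # hypothetical prefix behind R3

# R2's NHRP cache: tunnel address -> underlay (NBMA) address.
R2_NHRP_CACHE = {
    HUB_TUNNEL_IP: "10.1.1.1",
    R3_TUNNEL_IP: "10.1.1.3",  # hypothetical underlay address of R3
}


def advertise_from_hub(learned_routes, next_hop_self):
    """Routes the hub re-advertises to the other spokes.

    With next_hop_self the hub rewrites the next hop to itself;
    without it the original spoke next hop is preserved.
    """
    return {
        prefix: (HUB_TUNNEL_IP if next_hop_self else original_next_hop)
        for prefix, original_next_hop in learned_routes.items()
    }


def outer_destination(routes, prefix, nhrp_cache):
    """Step 1: routing lookup. Step 2: NHRP cache lookup."""
    next_hop = routes[prefix]
    return next_hop, nhrp_cache[next_hop]


# The hub learned R3's loopback from R3 itself.
hub_learned = {R3_LOOPBACK: R3_TUNNEL_IP}

preserved = advertise_from_hub(hub_learned, next_hop_self=False)
print(outer_destination(preserved, R3_LOOPBACK, R2_NHRP_CACHE))
# ('100.64.0.3', '10.1.1.3') -> tunnelled directly to R3

rewritten = advertise_from_hub(hub_learned, next_hop_self=True)
print(outer_destination(rewritten, R3_LOOPBACK, R2_NHRP_CACHE))
# ('100.64.0.1', '10.1.1.1') -> traffic flows through the hub
```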

Phase 3

DMVPN Phase 3 is the final and most scalable phase in DMVPN, as it combines the summarisation benefits of phase 1 with the spoke-to-spoke traffic flows achieved via phase 2. This phase works by having the hub advertise a default route (or a summary of all spoke prefixes) and send NHRP redirect messages. On the spokes you only need to enable NHRP shortcuts for DMVPN phase 3, as routing will complete the rest. DMVPN will be using the same topology as before:

- On R2 the only entry in its routing table is a default route received from R1, with R1 set as the next hop.

- As we know, this would normally cause hub and spoke traffic flows as the next-hop points to the hub for the data plane.

- Therefore, when R2 wishes to ping the loopback on R3 (Spoke-to-spoke traffic flow) it will initially send the traffic up to the hub as that is what the routing table has suggested.

- Upon receipt of the packet R1 will realize that the destination is another spoke on the DMVPN network (Due to the destination being out the same DMVPN interface).

- Therefore, R1 will then send an NHRP redirect down to both R2 and R3 telling them that their source addresses are reachable by each other. This can be seen in R1's debug output.

- Upon receipt of a redirect message, R2 and R3 will conduct NHRP resolution requests with each other (R1 acting as the proxy) to resolve their NBMA Address with their VPN address.

- Upon completion, R2 will have two extra entries in its routing table recorded by NHRP.

- One is the destination network that was previously summarised; the second one is used for recursive routing to allow spoke-to-spoke traffic flows on the tunnel interface.

- This process then happens for each network and next hop on the DMVPN network, so only the information that is needed is on the spokes, as opposed to DMVPN phase 2 where all routing information was propagated.

- As with any DMVPN verification, traceroute reveals the direct spoke-to-spoke traffic flows.
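The effect of the redirect on a spoke's routing table can be sketched with a toy Python model. It collapses the redirect and the following NHRP resolution exchange into a single method call, so it is a simplification, not the protocol itself. The tunnel addresses 100.64.0.1 and 100.64.0.3 come from the text; the prefix used for R3's loopback and the class name are hypothetical.

```python
import ipaddress


class Spoke:
    """Toy phase 3 spoke: a default route plus NHRP-installed overrides."""

    def __init__(self, default_next_hop, shortcut_enabled):
        self.routes = {ipaddress.ip_network("0.0.0.0/0"): default_next_hop}
        self.shortcut_enabled = shortcut_enabled

    def lookup(self, destination):
        """Longest-prefix match over the routing table."""
        dest = ipaddress.ip_address(destination)
        matches = [net for net in self.routes if dest in net]
        best = max(matches, key=lambda net: net.prefixlen)
        return self.routes[best]

    def receive_redirect(self, prefix, peer_tunnel_ip):
        """The hub signalled a better path; ignored without shortcuts."""
        if not self.shortcut_enabled:
            return
        # After resolution, NHRP installs two entries: the destination
        # network and a host route for the peer's tunnel address.
        self.routes[ipaddress.ip_network(prefix)] = peer_tunnel_ip
        self.routes[ipaddress.ip_network(peer_tunnel_ip + "/32")] = peer_tunnel_ip


R3_LOOPBACK = "172.16.3.1"  # hypothetical address behind R3

r2 = Spoke(default_next_hop="100.64.0.1", shortcut_enabled=True)
print(r2.lookup(R3_LOOPBACK))  # 100.64.0.1: the first packet goes via the hub

r2.receive_redirect("172.16.3.1/32", "100.64.0.3")
print(r2.lookup(R3_LOOPBACK))  # 100.64.0.3: later packets go directly to R3
print(len(r2.routes))          # 3: the default route plus two NHRP entries
```

A spoke created with `shortcut_enabled=False` keeps only its default route, so its traffic continues to flow through the hub.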

Phase 3 configurations are relatively simple and normally require summarisation and enabling of NHRP redirects. Let's review the hub's configuration first...

Since we are using EIGRP, we can issue a summary-address command to force a default route to be advertised out to the spokes. Beyond that, we only need to enable the sending of NHRP redirects. Also note that next-hop-self, which was disabled for phase 2, has been enabled again, as NHRP redirects now handle the next hop.

Moving on to the spoke’s configuration...

On the spokes you just need to enable NHRP shortcut, which allows the spoke to accept any NHRP redirect information. Failure to configure this would force hub and spoke traffic flows, as the spokes would ignore any redirect messages sent to them.